FORM FOLLOWS COMPUTATION

Parametric modeling with PARAMA

by Stefan Hechenberger

Foreword

"Form follows computation" captures a present phenomenon: the methodical overspill of programming is giving rise to a distinct aesthetic sensibility. The way we understand shape and space by computational means represents a paradigmatic shift in many design-related fields. "Computational means" implies an action medium that departs from mere graphical and physical representations. It allows us to freely express adaptive models of a design that are unusually pliable and polyvalent--one expression of the design can represent multiple avenues to the design objectives. In such generalized or parameterized designs the terms by which adaptation can take place become part of the design expression. The ability to express these latent adaptations is fundamentally aligned with languages that are comprehensible to both humans and computers. Many programming languages achieve such a quality and some are especially apt at describing the ideas behind a design. Their key objective is not precise and deterministic application but the expression of "...poorly understood and sloppily-formulated ideas" [Minsky]. They facilitate the development of mental concepts rather than the concepts' mere implementation.

The computer's role in the design process has notoriously been one of efficiency. The common sales pitch praises tool x for accelerating work by y times, increasing profit margins by z percent. This is close to missing the point because it neglects the importance of a conceptual approach. The ability to make conceptual leaps that are not directly prescribed or anticipated by the production environment is typical of good design. The way computers are used in the design process should facilitate paradigm shifts. Solely increasing efficiency is hardly a matter of conception but one of optimization. Optimizing poor design strategies seems fundamentally wrong and happens far too often.
In that sense I believe that it is only marginally interesting to talk about the use of computers for mere optimization. On the other hand, when we use computational approaches to describe the design, and then also to describe how these descriptions are made, we fundamentally change our aesthetic sense. "Form follows computation" epitomizes the emergence of an open field. It is not the conclusion but the initial word. It assumes programming as a way of thinking what has been unthinkable--not because humans should adapt to the rigidity of computation but because computation allows a new level of vagueness. This is contrary to common notions of computation. My conviction is that the "programming of form" in unprecedented poetic, abstract, self-contradicting, poorly defined, open-ended, lyrical ways is a matter of approach. Once the medium is mastered, its reputation will dramatically shift to a point where it will be known for its expressive charisma rather than for its mechanistic quality.

Formal Description

PARAMA is a language-based parametric modeler for describing and developing shape and form from vague and poorly defined ideas. It is directly applicable in architecture, industrial design, spatial arts, and other design-related fields. PARAMA is based on the assumption that sketching out ideas by programming is possible and, within the right programming environment, can also be done in an effective manner. In part this requires an environment that is conducive to a quick and spontaneous working pace, and in part this environment has to make ideas that are not fully understood describable. Paper and pencil are great design tools because of these two qualities. Pencil sketches are quick, and little-understood areas of the design can be drawn as overlaying approximations of varying instances. As the design becomes more complex, the quick working pace is likely to diminish.
Redrawing a complex three-dimensional object because the last incarnation got too convoluted is often a laborious process. Rhapsodic expressions of ideas become repetitive drawing exercises that interrupt or even inhibit the train of thought. Rather than sketching a graphical representation of the idea (either by pencil or drafting software), one might instead use a special language to describe the conceptual underpinnings. If such a description is expressed in a language that is also understood by computers, then the creation of a physical representation (concretion) is effectively freed from any laborious, repetitive activity. More importantly, a codified idea introduces new ways of tweaking and adaptation to the design process. Not only can one postulate how a design adapts under certain conditions, but reformulations of the idea also come naturally.

Design ideas expressed with PARAMA do not have to be explicit but may include undefined design aspects. Such gray zones are only defined by their boundaries, not by their specifics. Two intersecting cuboids may have explicit dimensions but their intersection may only be defined by boundary values. A typical gray zone definition may postulate that the overlap has to be between zero and seventy percent along both x-axis and z-axis (and locked to fifty percent overlap along the y-axis). Any subsequent tweaking of the design will not transgress these boundaries. Based on this notion of two overlapping cuboids, PARAMA can then be asked to sample specific manifestations (concretions). The resolution by which PARAMA permutes over the design domain determines a finite number of concretions--in this case graphical representations:

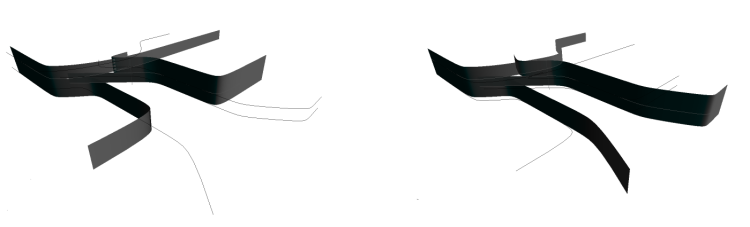
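The sampling of a gray zone can be sketched in a few lines of plain Python. This is only an illustration of the principle, not PARAMA's actual API: the dictionary layout, the names `GRAY_ZONE`, `sample_range`, and `concretions`, and the resolution of four are all assumptions made for the example. Each free parameter is sampled at evenly spaced values within its boundaries, and every combination yields one value set.

```python
from itertools import product

# Hypothetical gray-zone definition: each parameter is a (minimum, maximum) range.
# Names and layout are illustrative assumptions, not PARAMA's API.
GRAY_ZONE = {
    "overlap_x": (0.0, 0.7),  # free between 0% and 70%
    "overlap_y": (0.5, 0.5),  # locked to 50%
    "overlap_z": (0.0, 0.7),  # free between 0% and 70%
}


def sample_range(low, high, resolution):
    """Return evenly spaced values from low to high, inclusive."""
    if low == high or resolution < 2:
        return [low]
    step = (high - low) / (resolution - 1)
    return [low + i * step for i in range(resolution)]


def concretions(gray_zone, resolution):
    """Permute over all parameter ranges; return one value set per concretion."""
    names = list(gray_zone)
    axes = [sample_range(*gray_zone[name], resolution) for name in names]
    return [dict(zip(names, values)) for values in product(*axes)]


value_sets = concretions(GRAY_ZONE, resolution=4)
print(len(value_sets))  # 4 * 1 * 4 = 16 value sets
```

Because the y-overlap is locked, it contributes a single value, so only the two free parameters multiply the number of concretions.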

A design description with gray zone definitions may also be called a "parameterized design." The variability of the design is represented by a set of parameters. In the process of generating a concretion each parameter is mapped to a specific value, resulting in a value set. These particular values are then used to concretize the "vaguely defined" aspects of the design idea.

Associative Relationships

Incorporating multiple interdependent parameters unleashes the true potential of parametric modeling. From a practical point of view this allows fundamental changes in the design to be propagated throughout subsequent design decisions. In "FAB" [Gershenfeld, 127], Neil Gershenfeld exemplifies this concept with parametrically defined bolts. If larger bolts are needed to hold an object together, the value that represents the diameter can simply be increased. In such cases, an associative relationship can be used to "automatically" adapt the holes around the bolts. Elaborating on the cuboid example, an additional third cuboid may assume its dimensions from the overlapping percentage of the other cuboids. Whenever the overlap changes, the third cuboid changes its width accordingly. The terms under which the width is deduced from the overlap can be based on any kind of mapping and do not have to be a direct transcription (e.g. the width can be inversely mapped or divided by two). Complex hierarchical and circular relationships can be built that go beyond the sole reduction of repetitive work. More often than not, associative relationships lead to unanticipated results. Adhering to the fundamentals of complex systems, the specific outcome is unpredictable but the general trajectory can be controlled (predicted). This phenomenon is congruent with the complex patterns generated by the simple rules of cellular automata (a fundamental concept in the complexity sciences).
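The third-cuboid example can be illustrated with a small, hypothetical model in which derived values are recomputed from free parameters whenever they are read. The class `Model`, its methods, and the numbers used are assumptions for this sketch and do not reflect PARAMA's actual interface. It handles hierarchical relationships only; circular ones would need an iterative solver that is not shown here.

```python
class Model:
    """Holds free parameters and relationships that derive values from them."""

    def __init__(self, **free):
        self.free = dict(free)
        self.rules = {}

    def set(self, name, value):
        """Change a free parameter; derived values follow on the next read."""
        self.free[name] = value

    def relate(self, name, rule):
        """Register a derived value; rule receives the model and returns a number."""
        self.rules[name] = rule

    def __getitem__(self, name):
        # Derived values are evaluated on demand, so they always reflect
        # the current state of the parameters they depend on.
        if name in self.rules:
            return self.rules[name](self)
        return self.free[name]


model = Model(overlap=0.5, base_width=10.0)

# Inverse mapping: the less the two cuboids overlap, the wider the third one.
model.relate("third_width", lambda m: m["base_width"] * (1.0 - m["overlap"]))

print(model["third_width"])  # 5.0
model.set("overlap", 0.25)
print(model["third_width"])  # 7.5
```

Swapping the lambda for a different mapping (for instance dividing the overlap by two) changes how the width is deduced without touching the rest of the description.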
Controlling complexity and assuring that the trajectory falls within the design objectives by adjusting associative relationships is one of the characteristic activities of parametric modeling and of PARAMA. Because of the difficulty of assessing causal effects from the established relationships, this activity can have a strong emphasis on intuitive decisions rather than deliberate rule expression. A more general way of describing the concept of associative relationships is in terms of simulation. By establishing associative relationships one creates rules that describe how to construct the object in question. From this vantage point, the simulation setting is the description of the design idea and the values that are applied to the design are the initial condition of the simulation. Just as the simulation of an Icelandic glacier might be the source of new knowledge in the area of geophysics, the simulation of spatial design might lead to a new understanding of architectural form. [Simulation as a Source of New Knowledge, Simon, 14]

In the development of PARAMA I used pseudo-architectonic objects to fathom language (API) requirements that allow a high latitude of parametric expression. Of particular interest was the idea in which an interrelated set of angles is used throughout the design. Changing one angle affects all others in ways that still comply with the original design intention:

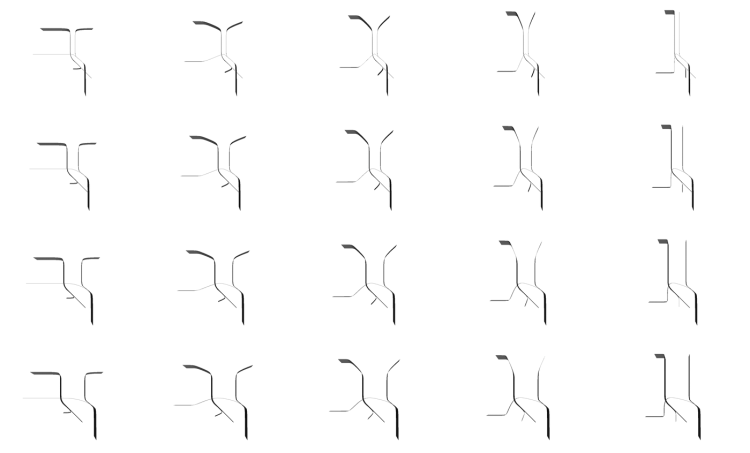

In addition to these harmonized angles, this design idea incorporates another "parameterized feature," namely a variable center pathway. Permuting over these two parameters (as two parameters can conveniently be presented in a 2D medium) supplies some insight into the design domain as it is projected by the underlying codified idea:

The vertical axis represents the spectrum from minimal to maximal center pathway width. The horizontal axis represents the transition from minimum to maximum angle degree. Top left and bottom right concretions represent the minimum and maximum of both parameters while the concretions in between represent transitional combinations of them.

Technicalities

From a practical point of view, PARAMA is a language for postulating design domains and a graphical interface that communicates derived concretions to its users. Technically, this is accomplished by complementing a general purpose programming language (Python) with a language extension (API) that is specific to describing and presenting parametric form. Such a "metalinguistic abstraction" [Abelson, Sussman, ch.4] is fundamentally a proposed way of thinking about parametric form. Key concepts such as ParamSet, ValueSet, Idea, and Concretions are helpful in terms of natural language as well as in programmatic language. In PARAMA's code, these concepts are represented by class definitions and can be used to the fullest extent of object oriented programming strategies:
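As a rough illustration of how such concepts might translate into classes, the following sketch reuses the names ParamSet and Idea from the text. Everything else is an assumption: the helper class `Param`, the method names, the use of a plain dictionary as a value set, and the numeric ranges for angle and pathway width are invented for the example and should not be read as PARAMA's actual class definitions.

```python
from dataclasses import dataclass
from itertools import product


@dataclass(frozen=True)
class Param:
    """One variable aspect of a design, bounded by a minimum and a maximum."""
    name: str
    minimum: float
    maximum: float

    def at(self, t):
        """Value at position t, where t runs from 0.0 (minimum) to 1.0 (maximum)."""
        return self.minimum + t * (self.maximum - self.minimum)


class ParamSet:
    """The parameters that together span a design domain."""

    def __init__(self, *params):
        self.params = list(params)

    def value_sets(self, resolution):
        """Yield one value set (a plain dict here) per sampled point."""
        if resolution < 2:
            raise ValueError("resolution must be at least 2")
        steps = [i / (resolution - 1) for i in range(resolution)]
        for combo in product(steps, repeat=len(self.params)):
            yield {p.name: p.at(t) for p, t in zip(self.params, combo)}


class Idea:
    """A codified design idea: a ParamSet plus a rule that builds a concretion."""

    def __init__(self, param_set, build):
        self.param_set = param_set
        self.build = build

    def concretions(self, resolution):
        """Return the concretions obtained by permuting over the design domain."""
        return [self.build(vs) for vs in self.param_set.value_sets(resolution)]


def describe(value_set):
    """Stand-in for geometry generation: a textual concretion."""
    return "angle {angle:.0f} deg, pathway {pathway_width:.1f}".format(**value_set)


# Assumed ranges; the text gives no concrete numbers.
idea = Idea(
    ParamSet(Param("angle", 15.0, 75.0), Param("pathway_width", 1.0, 3.0)),
    build=describe,
)
grid = idea.concretions(resolution=3)
print(len(grid))  # 9 concretions, a 3 x 3 grid
print(grid[0])    # angle 15 deg, pathway 1.0
print(grid[-1])   # angle 75 deg, pathway 3.0
```

The first and last entries correspond to the minimum and maximum of both parameters, mirroring the corners of a two-parameter grid of concretions.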

How to deliver an Idea

"Most people have been indoctrinated by the orthogonal hegemony [...] they think space is being constructed by Diophantine coordinates. [...] the structure of space has very little to do with right angles." [Bram Cohen, creator of BitTorrent]

For the most part, ideas do not comply with our physical (Cartesian) space and manifesting them there is not trivial. In part this is difficult because we need to find appropriate terms of expression in the target medium and in part such translations almost always require a change of dimensionality. Mental conceptions tend to have a polyvalent quality that prevents them from being directly mapped to the two to three dimensions of most of our media. The simple act of thinking about a three-dimensional object hardly produces one specific result, but brings to mind a multitude of objects that are associated with the mental condition that represents the idea. A mapping to Cartesian space requires at the very least some sort of selection process to occur and more likely a kind of iterative spiraling and continuous interaction with the target medium. The profound efforts that go into describing ideas by code are only justifiable by the indifference of programming to "dimensional requirements." This indifference provides grounds for a similar kind of polyvalence as in the mental origin. The terms by which the idea is expressed are still reliant on significant translation but the multi-dimensionality of mental ideas can better be preserved. Mathematically, parameters are fundamentally the same as Cartesian dimensions. Adding an additional parameter adds a new dimension.

The intended way of delivering an idea with PARAMA is via a custom graphical user interface (GUI). The designer describes the idea, packages it as an application, and sends it to whoever is interested. This is necessary because parameterized or multi-dimensional designs cannot be depicted on a two-dimensional or three-dimensional display per se.
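Since every parameter behaves like an additional dimension, the number of concretions needed to cover a design domain at a fixed resolution multiplies with each parameter added. A short calculation makes the growth tangible. The function name and the resolution of ten samples per parameter are assumptions chosen for the illustration.

```python
def concretion_count(resolution, parameter_count):
    """Number of sampled concretions when every parameter gets `resolution` values."""
    return resolution ** parameter_count


# Assumed resolution of 10 samples per parameter.
for n in range(1, 6):
    print(n, "parameters:", concretion_count(10, n), "concretions")
```

With ten samples per parameter, a model with five parameters already spans 100,000 concretions, far more than can be laid out side by side on a single display.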
The obvious trick is to spread out the remaining dimensions over time. Just as three-dimensional models can be conveyed on a two-dimensional display by changing the vantage point over time, any additional parameter can be "depicted" over time. One notable side effect of this strategy is that the time required to fully "depict" a multi-dimensional model increases exponentially with the number of parameters. The more parameters a model has, the more it loses the characteristics of a concrete design and acquires the characteristics of a design domain. This term implies that parametric deviations can be "seen" by navigating through an open field, which is what PARAMA's graphical interface facilitates.

Context

PARAMA grew out of a conceptual framework that fosters transvergent production models. In this model, a theory can be a product [C5], product design can be art, and design is a given. Creating the artifact is a process of embedding the product in a cultural context. Questions of functionality or necessity are often subordinate to questions of how distant cultural aspects are set in relation and how meaning is created from these new correlations. While PARAMA has a clear functional goal and a practical relevance, it also demarcates an intricate position at the intersection of product fabrication, architecture, industrial design, linguistics and art. In an eclectic fashion, the following sections lay out points of contact within these areas.

Personal Fabrication

As we are finding a consensus on the antipathy toward Ford's production model of the assembly line and we are seeing personal fabricators on the horizon, we are also departing from a strictly repetitive mass production model. The subsequent alternative is a production model that accounts for personalization and adaptation. The idea that uncountable numbers of the same products are globally appropriate for all of us is only viable as long as adapted solutions have a significant economic disadvantage.
At the current state of affairs, personal fabrication is unnecessarily dormant. "The biggest impediment to personal fabrication is not technical; it's already possible to effectively do it." [Gershenfeld, 16] Gershenfeld further indicates that increasing affordability will eventually foster public initiative for broad adaptation. For design-oriented disciplines that rely on mass-produced items this raises the question of how art production and product design will change with the shift to personal fab culture. Most current design approaches are geared towards a final product. An artifact that has to account for adaptation requires a different design methodology than one that has a concrete output. It requires the designer to complement the design with conditional readiness that unfolds under certain eventualities or requirements. Adding adaptability to the design expression requires appropriate means for describing these latent adaptations. These also might not manifest as simple add-ons, but are more likely to induce a different approach. The general idea of parametric modeling addresses the need for variable design but still leaves one unclear about how adaptive design expressions are made. Standard graphical user interfaces are successful to some degree but tend to impose rigid structures on the design process, which can be problematic if the process is not yet fully understood. On the other hand, programming allows for a high latitude of expression and is known to be especially well suited for controlling complexity. These are two reasons that make designing by programming attractive.

Programming Languages

An understanding of the nature of computation seems to be critical for "programming form." A common pitfall is to evaluate the act of programming by what is most commonly produced with it. Mainstream computing is characterized by controlling software--flight traffic control, bank transactions, internet communications, product tracking and the like.
While being sophisticated constructs in their own respect they do not sufficiently represent their conceptional foundation. The stereotypical image they propagate is that of mechanistic rigidity. Systems that are engineered by armies of formula-adoring computer scientists--each of them trained to design precise infrastructures that do what the program prescribes. This is an accepted image within the field. Total precision is seen as something practically attainable once a finite number of bugs have been fixed. This view gets effectively stirred up when seminal figures within the discipline note that "Computer Science is no more about computers than astronomy is about telescopes." [Dijkstra] or "...the programmer begins to lose track of internal details and can no longer predict what will happen..." [Minsky] Both of these statements suggest a different nature of computation. The latter is from a paper that lays out how programming can be used to express poorly understood ideas. Profoundly, it lays the groundwork for an understanding of computation as a medium for human thought. Minsky explains the emphasis on rigid use of computing by pointing out that it takes tremendous "technical, intellectual and aesthetic" skill for flexible use of computing. Non-formulaic programming is a matter of expertise "just as a writer [of natural languages] will need some skill to express just a certain degree of ambiguity." The field of computer science has roughly produced a total of 2500 languages [Kinnersley]. In consideration of this fact it is hard to despise the linguistic orientation of computing technologies. For PARAMA it is this language-centric quality of computational systems that is of relevance. The computer revolution is to a large extent a language revolution that is allowing us to think in new ways. Disregarded by common notion, computing languages are primarily developed for humans. 
"Programs must be written for people to read, and only incidentally for machines to execute." [Abelson, Sussman, preface] This is an important consideration for any further discourse on the nature of computational means. Once we accept that language design and language usage in computer science has very little to do with circuit board engineering or technical concerns in general, we can gain a better understanding of computation. The conviction that the "most fundamental idea in programming" is the usage and creation of new languages [Abelson, Sussman] also represents the fundamental understanding for PARAMA. From this point of view, popular dualities like art/science, emotional/rational, rigid/flexible, are irrelevant for programming, or, to appropriate a quote by Edsger Dijkstra, are "as relevant as the question of whether Submarines Can Swim" [Dijkstra]. Language is empirically tied to human thought and the fact that it might also be executed by a computer is additional capacity, not conceptual reorientation. For creative expression, programming languages are generally avoided and substituted for graphical user interfaces (GUIs). This strategy is based on usability and acceptability concerns associated with most programming languages. But counter to a common assumption, point-and-click interfaces do not necessarily make tasks simpler for users that are fluent in the underlying programming interface. This is especially true for highly complex systems. Systems that consist of ten million lines of code upwards such as mainstream operating systems have all been developed by writing text rather than by clicking graphical objects. In fact, software itself is hardly ever created with GUIs. For the same reason, embarking on point-and-click for expressing ideas in the field of philosophy sounds absurd. The latitude of expression associated with discipline-specific lingo seems absolutely essential. 
I suspect that programming languages' unmatched ability to control complexity results from the following reasons: As with natural languages, programming languages allow for the aggregation of knowledge under symbolic abstractions. Both have powerful mechanisms for adjusting the level of abstraction, and both are highly optimized to control the generality versus specificity of propositions. But unlike in natural languages these symbolic abstractions can be made on the fly while programming. Symbolic proxies are fully functional from the time they are expressed, while in the context of natural language they need to be agreed on before they can effectively be used. The act of programming is usage and creation of language at practically the same time.

Design by Software

Computer-based design manifests in uncountable and diverse ways. Software mediates between the hardware and the abstraction layer that was chosen as the terms by which to express the design. For this abstraction layer, one of the most classifying factors is the knowledge it can hold. From low level to high level, this suggests a spectrum by which most design software can be characterized. Can it only hold specifics on how lines and surface patches are positioned, or can it be "aware" of how geometric primitives connect and intersect? Does it only "know" how doors and walls relate, or does it know how to apply viable proportions of a specific architectural style? The spectrum extremes could be termed "drafting" (low level) and "synthesizing" (high level). The former implies transferring very little knowledge that goes beyond explicit representation. The latter implies omitting specifics and describing what has to be known to generate representations (concretions). In most cases this also implies added parametric variability that is capable of generating multiple results. A design description that results in only one physical or graphical representation is effectually a drafted one.
In "Architecture's New Media," [Kalay, 70], Yehuda Kalay uses a similar classification scheme. For him the decisive factor is whether the design software handles "objects" or solely "shapes." He attributes doors, windows, columns, and stairs to "objects" while polygons, solids, NURBS, and blobs to "shapes." Relevant to his classification is whether the software has an understanding of what is being drawn. If the software knows that a certain shape is a roof, it might be able to assist designers in its usage and alert them when turned vertical (analysis). The software might also be able to modify the roof within the constraints of a generalized roof design (synthesis). When the software is simply confronted with shapes, no analytic or synthetic assistance can occur and from the software's point of view, the expression is a matter of positioning geometric primitives. In terms of chronological succession, Kalay makes the important observation that design software can be divided into three generations. Surprisingly, first and third generation software coincide with the "smart" approach while the second generation is primarily conceived as drafting tools. Early pioneers of the field have been aware of the synthetic/parametric potential, were overrun by the easy applicability of drafting software, and presently have their vision of a computationally rich approach rediscovered and further pursued.
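Kalay's distinction between "shapes" and "objects" can be made concrete with a small sketch. The classes `Polygon` and `Roof`, the pitch limits, and the warning texts are hypothetical and serve only to show where analysis and synthesis become possible once the software knows what a shape represents.

```python
from dataclasses import dataclass


@dataclass
class Polygon:
    """A 'shape': only vertex positions, no knowledge of what it depicts."""
    vertices: list


@dataclass
class Roof:
    """An 'object': a shape plus knowledge about what makes a valid roof."""
    outline: Polygon
    pitch_degrees: float
    min_pitch: float = 5.0   # assumed bounds of a generalized roof design
    max_pitch: float = 60.0

    def analyze(self):
        """Analysis: return warnings about implausible usage."""
        if self.pitch_degrees >= 90.0:
            return ["roof is vertical"]
        if not self.min_pitch <= self.pitch_degrees <= self.max_pitch:
            return ["pitch outside the generalized roof design"]
        return []

    def with_pitch(self, pitch_degrees):
        """Synthesis: return a modified roof, clamped to the allowed pitch range."""
        clamped = max(self.min_pitch, min(self.max_pitch, pitch_degrees))
        return Roof(self.outline, clamped, self.min_pitch, self.max_pitch)


outline = Polygon([(0, 0), (8, 0), (8, 6), (0, 6)])
print(Roof(outline, 30.0).analyze())                   # []
print(Roof(outline, 90.0).analyze())                   # ['roof is vertical']
print(Roof(outline, 30.0).with_pitch(80.0).pitch_degrees)  # 60.0
```

A bare `Polygon` offers no hook for either method: without the knowledge that it is a roof, the software can only store where its vertices are.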

Like computer-based design in general, strategies for establishing parametric variability in the design are manifold. "Avant-garde Techniques in Contemporary Design" [De Luca, Nardini, 18] names the algorithmic parametric, the variational, and the Artificial Intelligence (AI) approach as fundamental categories. The former two are widely congruent with PARAMA's central ideas. Language-based parametric modelers are, by the nature of most programming languages, algorithmic. When they, like PARAMA, also include mechanisms for spelling out constraints, objectives, and permutation resolutions, they also comply with the variational approach. AI is possible in a framework like PARAMA but is not specifically endorsed. The main reason for eschewing this approach is its dependency on computer-based evaluation of concretions. Functionally this is feasible, but stylistically this is highly problematic. Implementing AI that is capable of assessing the cultural contexts from which the design acquires meaning proves extremely difficult.

Kalay uses a different categorization scheme and also embarks on a different term to address the whole topic: "synthetic" instead of "parametric." Depending on the vantage point, one term seems to become a subcategory of the other. Independent of this hierarchic relationship, they embody the same core ideas of computational design. Kalay categorizes computational design approaches by whether they use "procedural," "heuristic," or "evolutionary" methods. The latter two overlap with De Luca's and Nardini's AI category as they require an evaluative instance. Because of the aforementioned difficulty of teaching computers cultural correlations, neither is considered in PARAMA. Unfortunately, Kalay's remaining category (procedural) falls short of capturing the expressive flexibility of PARAMAesque frameworks, as he does not distance formulaic rigidity from programmatic design.
Linguistic Considerations

When media theoretician Friedrich Kittler was confronted with the popularly assumed crisis of literature, he objected to the claim by suggesting a different weighting: "I can show you infinite amounts of text ... it makes 'nice' images and sounds ... I generate with literature the opposite [rich media]" [Kittler]. This captures Kittler's assumption that the act of writing computer code assumes a similar cultural locus as writing novels or research papers. From this viewpoint, literature is far from crisis and unequivocally blossoming. As open source software communities demonstrate, millions of people collaborate in hundreds of thousands of projects to express ideas in written text [Sourceforge]. In most of these projects, a programming language is the dominant means of conveying ideas.

Inferring from the Sapir-Whorf hypothesis, adapting to a different language fundamentally impacts the human condition. "We see and hear and otherwise experience very largely as we do because the language habits of our community predispose certain choices of interpretation" [Sapir, 69]. Associated with this hypothesis are the principles of linguistic relativity and linguistic determinism. The former addresses the influence of language on our perception of the world, and the latter, language's direct influence on human thought. According to these two concepts, switching from one language to another has immediate consequences on the trajectory of thought and how the world is mentally constructed. In its most extreme interpretation, the Sapir-Whorf hypothesis would predict a fundamentally different way of thinking for communities that use a certain programming language as their primary means of exchanging ideas. With these different thought processes, they would interpret their cultural landscape along these computational means. In stark contrast to the above intellectual doctrine is the idea of linguistic universalism.
Linguists like Noam Chomsky or Steven Pinker cultivate a profound body of theory in support of an innate language ability that, in the form of 4,000 to 6,000 natural languages, surfaces in different variations [Pinker, 232]. The line of argument is split into two parts. Firstly, natural languages are not fundamentally different from each other. All studied natural languages, coexisting and isolated, exhibit structural similarities. This premise emerged from comparative linguistics through the identification of universally applicable grammatical features. "[...] literally hundreds of universal patterns have been documented. Some hold absolutely" [Pinker, 234]. Secondly, this observed universalism is little acculturated, but a genetic feature that was brought forward by the adaptations of human evolution. "The crux of the argument is that complex language is universal because children actually reinvent it, generation after generation" [Pinker, 20]. To prove genetic predisposition, Pinker presents different situations in which "people create complex languages from scratch" which are suspiciously similar to existing ones. Historically, this can be reconstructed when people with different languages become isolated as a group. In these communities they always developed a "makeshift jargon called pidgin." Pidgin is a simple language void of most grammatical resources (no consistent word order, no prefixes or suffixes, no tense or other temporal and logic markers, no structure more complex than a simple clause). From this simple jargon, the first generation of children spontaneously develops a grammatically rich language (creole) that is comparable to culturally established languages. This impressive reinvention of language can also be observed when deaf children that were never exposed to complex language create their own grammatically rich sign language. 
If universal features can be observed, and these features are developed without acculturation, then, so the argument goes, a language predisposition or universal language must be inborn.

Kittler's recommendation for consolidating the conceptual frameworks of natural and computational languages exposes programming to the aforementioned conflicting theories. As the conceptual terrain becomes increasingly saturated by these two antithetical positions, I will engage the issue impromptu on a different level. Grammar might not be inborn but a facet of our environment. Analogous to the complex path of an ant that is predominantly induced by the landscape rather than the ant's brain [Simon, 51], the phenomenon of a universal grammar might primarily be a reflection of our environment. The genetic predisposition might function on a more elementary level. Evolution might only have provided us with a simple but highly attuned urge to recognize and communicate patterns. This, combined with our brain's neurological capability, might in fact lead to similar patterns in our language, mostly unaffected by where we are on the planet and independent of how we acquire it. Programming languages, or for the sake of broadening the argument any kind of artistic expression, supply an indication of the validity of this premise. The millions of people who use computational languages have acquired a language skill with, depending on the particular language, arbitrary grammatical features. In discordance with the notion of an innate universal grammar, the human urge might only long for the ability to control complexity by combining "primitive elements to form compound objects" and "abstract compound objects to form higher-level building blocks" [Abelson, Sussman, ch.4]. If a medium of expression provides such qualities it is pursued. "[We] can't help it."
[Pinker, 20] The only remaining difference between natural language and general artistic expression is that we are not born with a brush, pencil, chisel, or notebook, but with a vocal organ.

Difference to Semiotics/Structuralism

PARAMA's approach of programmatic design uses language to describe design ideas. In a seemingly similar way, postmodern practice has an affinity for describing "designs" as "linguistic systems." Central to postmodernity is the concept that "everything, from fashion to visual art, could be interpreted as a wordless language" [Ibelings, 14]. Interpretation of artifacts is done by linguistic means. Syntagmatic and paradigmatic structures are the subject of analysis. Grammars define styles and every identifiable building block or sign is thought to reference another aspect of our culture. Signs signify, and the signified is inseparable from the signifier. Along with literal meaning (denotation) comes involuntary meaning (connotation) that any signifier acquires as it is being used to communicate. Meaning shifts as connotations change over time and reading becomes an act of writing if the connotations are purposefully reformulated. Accordingly, deducing meaning from the author's intention is an intentional fallacy [Wimsatt, Beardsley]. Followed to the extreme, context is everything. [Chandler]

In architecture, as in most other design fields, this led to a profound transition. The modernist indifference to location was abandoned for an architecture of allusion. "Allusion--especially to context--was one of the most frequently used means of legitimizing architecture" [Ibelings, 18]. Seminal figures like Charles Jencks and Jean-François Lyotard made the above school of thought a school of style. The computational design approach, as it is the topic of this text, and the semiotic school of thought of postmodern design do relate but are dissimilar activities. The former is synthetic while the latter is analytic.
Artifacts that have a computational production agenda are subject to semiotic criticism independently of how they were realized. Correlations between the semiotic interpretation and the language-based construction might exist but are not obvious to me at the moment of writing this text. One possible suspicion is that the "constructive grammar" bleeds through and becomes a grammar that is also significant in a cultural context. Mark Goulthorpe's Paramorph [dECOi], in which he maps urban activity to architectural form, is an indication of the validity of this hypothesis.

Traditional Art and Design
Attributing a fundamentally new approach to computational artifacts is problematic. Any cultural progression is characterized by a repurposing of previous styles and schools of thought. In the case of computational design in general, and PARAMA in particular, this holds true just as it holds true that Surrealism was influenced by Dada. Jean Arp's concept of the "concretion" is especially applicable to how ideas are cultivated with PARAMA. Arp thought about his sculptures as derivatives of mental models. He understood mental models as intimate organic constructs that grow like "a child in its mother's womb." Making concretions manifest in the physical world is a "process of crystallization." In PARAMA, ideas are crystallized in a similar fashion. One noticeable difference is an additional step. The "child" is first born in the activity of expressing the codified idea and is crystallized by running the program. With this difference, the creative involvement also shifts to this additional step, and the process of manifesting the idea in Cartesian space assumes a subordinate role. On a different scale, the International Typographic Style has been exploring issues that are pertinent to computational design. Anton Stankowski, Rudolph de Harak, Dietmar Winkler, and other practitioners of the former worked with a relatively rigid "syntax" to express ideas.
Supposedly, it is this similarity that leads to a recognizable resemblance to the International Style in many computationally expressed designs. For both, this rigid syntax suggests a formulaic rule system by which the design is projected. This exposed the International Style to extensive criticism--criticism that runs in tandem with the rational/intuitional polarization. For computational design this criticism is less problematic because it can easily be demonstrated that rules may be layered in ways that create complexities which deemphasize the underlying algorithmic nature. In the field of architecture "habitual design rule systems are as old as Vitruvius's De architectura (28 BC), Alberti's De re aedificatoria (printed 1485), Vignola's Regola delli cinque ordini d'architettura (1562), and Palladio's I quattro libri dell'architettura (1570)" [Kalay, 267]. They all state rules in natural language (Latin or Italian), and architects can offhandedly apply them in the architectural design process. The rules postulated with PARAMAesque systems could theoretically be applied in this manual fashion but are generally not subject to further human interpretation. Their precise machine interpretation and the ability to sample arbitrary numbers of concretions shift the creative involvement away from interpreting the rules to manipulating them directly. The aforementioned natural-language rule systems have been deduced from existing desirable architecture to supply guidelines for architecture to come. Vitruvius, for instance, extensively based his guidelines on classical orders. Doric, Ionic, Corinthian, and Tuscan orders had all been widely used before De Architectura [Vitruvius] was written. This is a "conservative" approach, conserving proven style for future endeavors. PARAMA's parametric modeling has an emphasis on exploration. Codified ideas are iteratively reformulated until the projected design domains represent the designer's intentions.

References
